Doppelgänger V - FEED

#XR #Immersive Storytelling #Spatial Soundscape #Installation

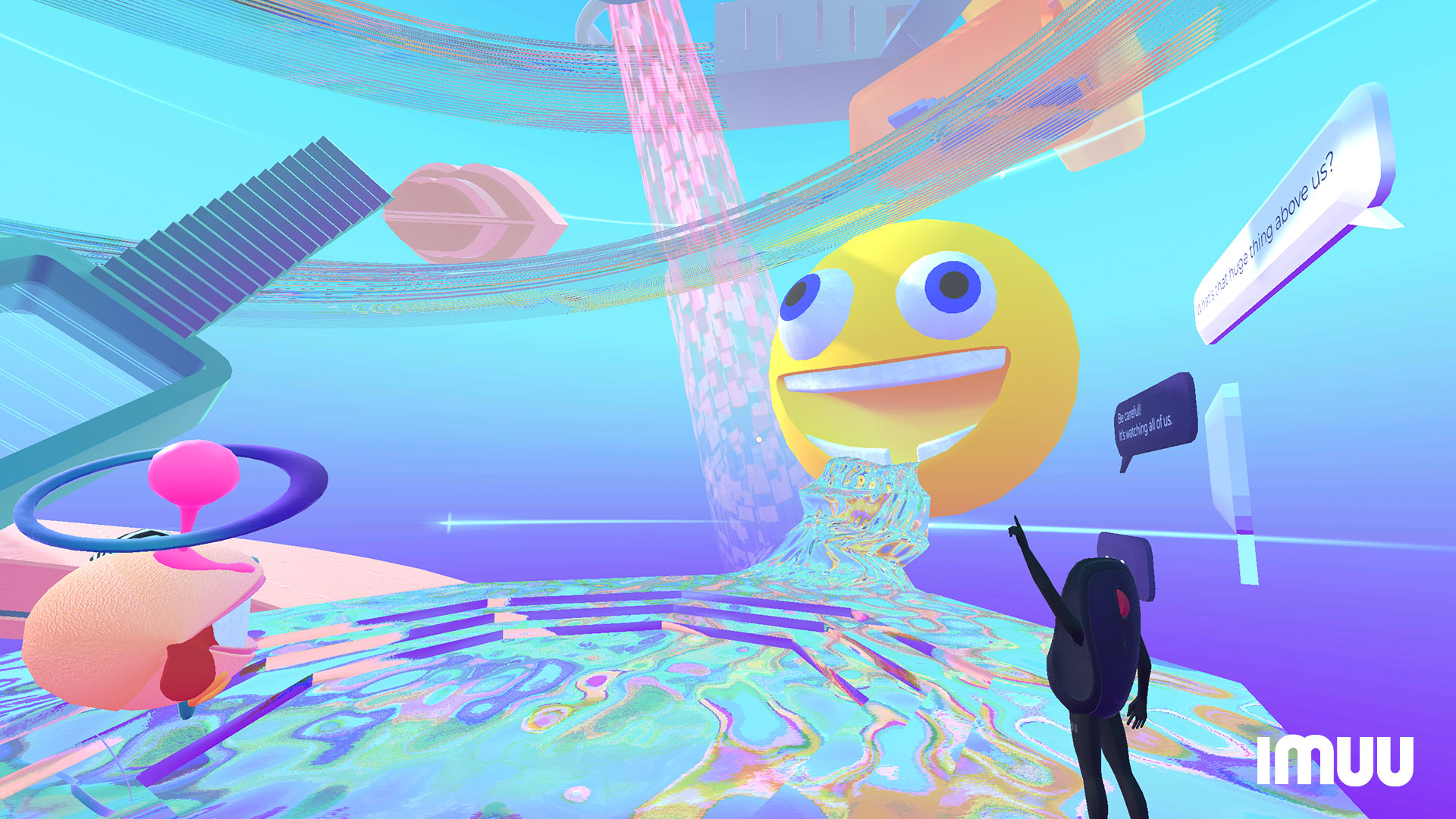

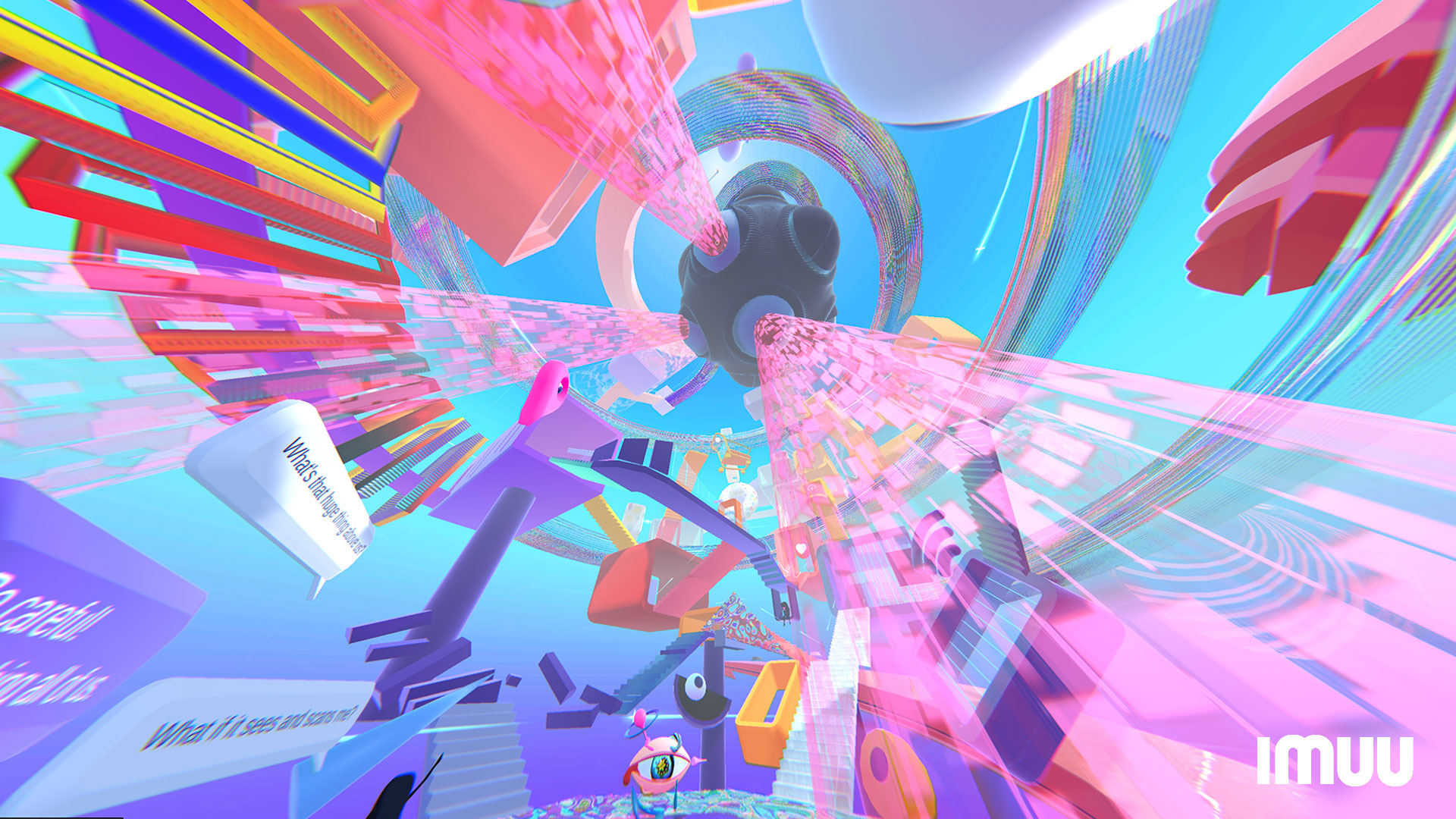

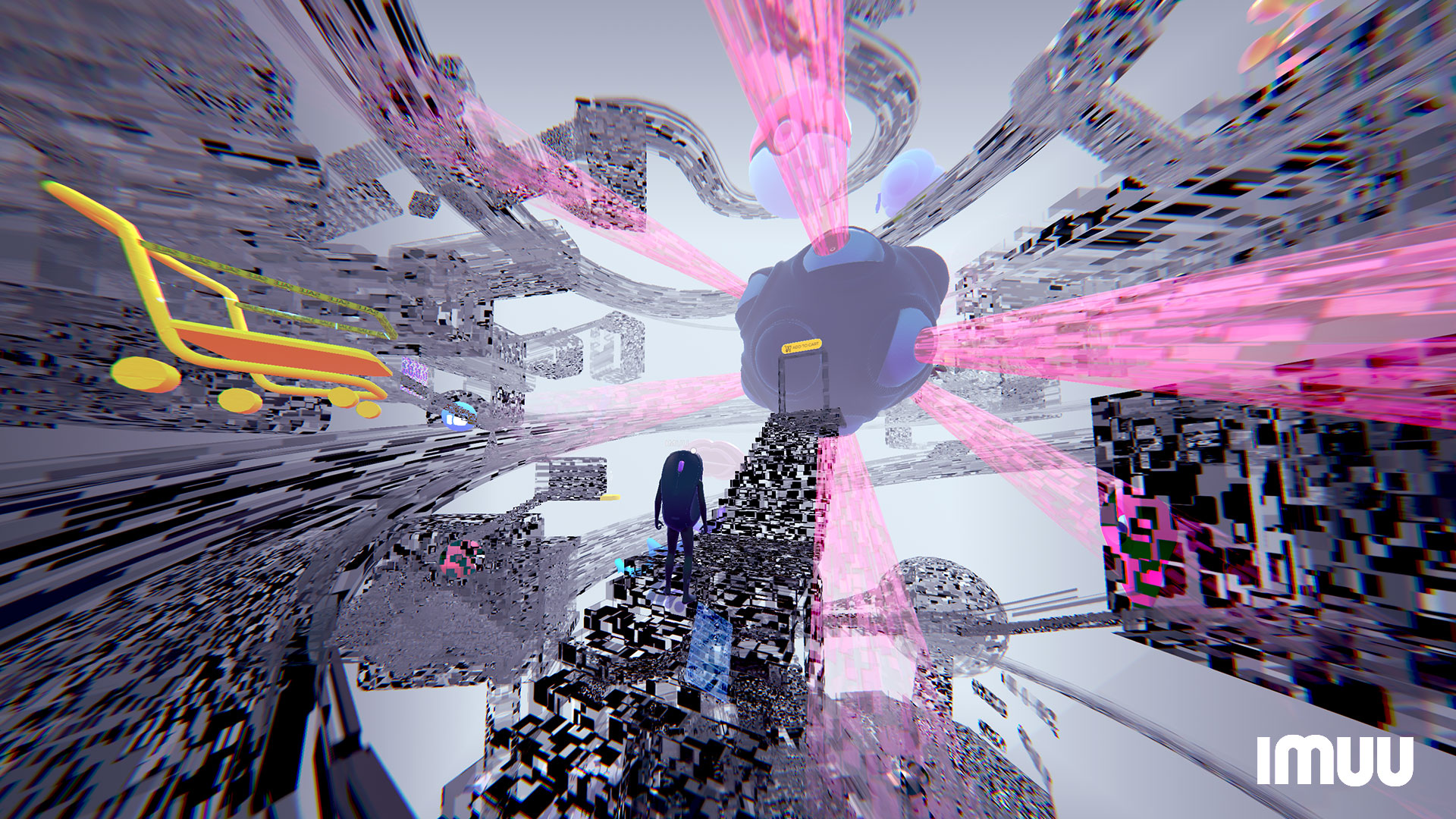

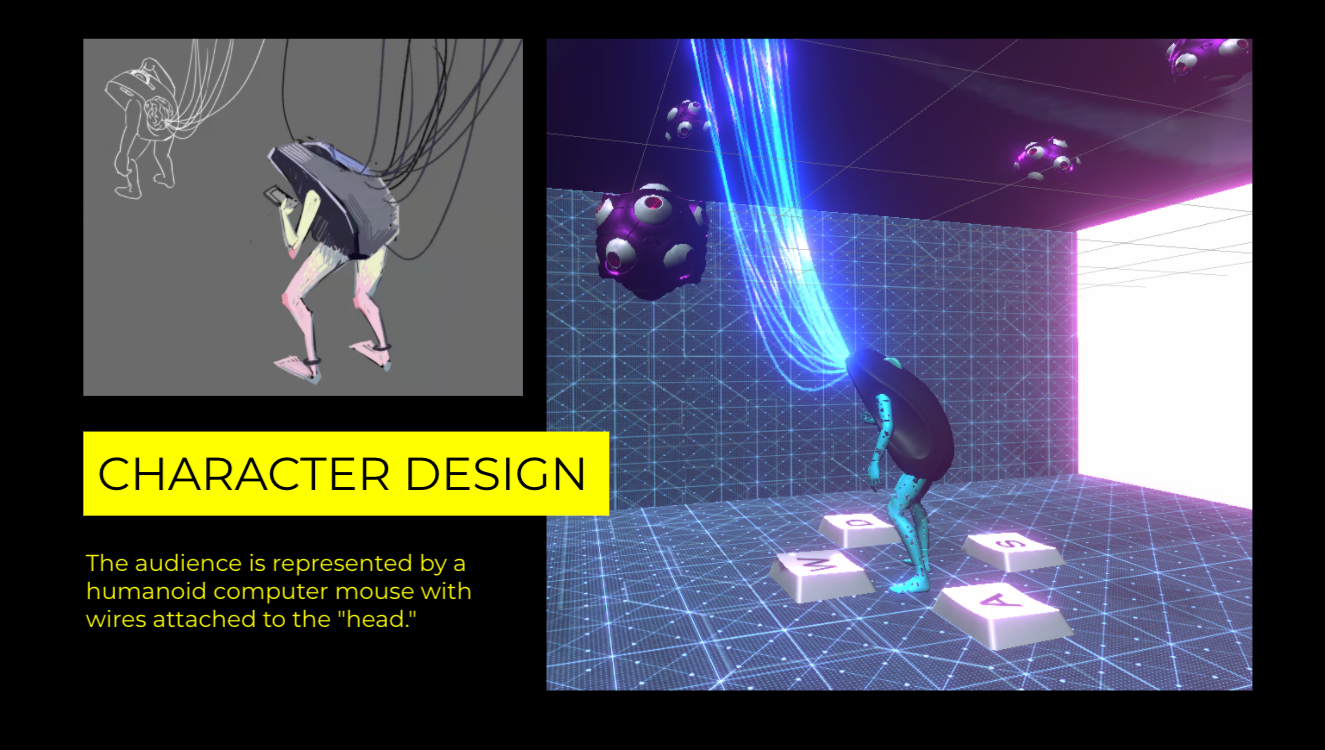

Doppelgänger V - FEED is an interactive installation that explores the notion of the self and the fragmented existence of bodies and the sensorium. Combining video game elements, spatial soundscape, and transmedia storytelling, FEED 3.0 takes the audience on an immersive audiovisual journey through a panopticon-like complex. A tracking apparatus constantly scans and monitors everything in the space, as if it were an autonomous being with many eyes. Meanwhile, the audience plays the role of a computer mouse: a curious listener and user walking along a long scrolling feed, passing various characters in their internet cubicles, and potentially getting lost in this internet labyrinth full of joyful colors and sound events.

The idea was initially inspired by our lived experience of confined spaces and an over-extended digital presence during the pandemic. Sitting still and staring at many screens, our bodies function as computer mice and cursors. Our existence manifests through digital walking between digital objects and spaces, forming a fragmented external "body".

Exhibitions

- International Symposium on Electronic Art (ISEA 2024), Brisbane, Australia
- SAT Fest 2024 (Best Narrative Work Award), Montreal, Canada
- Fulldome Festival 2024, Jena, Germany
- CyberArts Waveforms 2023 at Museum of Science, Boston, USA
- Interdisciplinary Conference on Musical Media at Harvard 2023, Boston, USA
- The Biennale at Multimedia Art Museum Moscow (MAMM) 2022, Moscow, Russia
- Ars Electronica x RIXC 2021, Linz, Austria
- NYC Media Lab x CHANEL Synthetic Media & Storytelling Challenge 2021, New York + Online
- Non Event Series 2021, Online